AI: A mountain of malinvestment?

2024 was a transformative year for AI and one of the best on record for companies exposed to AI-related themes, with standout performers such as Nvidia (up 171.2%) and Broadcom (up 110.4%). As we enter 2025, however, the market's perspective appears to have shifted, and we believe many market participants are grossly underestimating the risks inherent in AI investments.

Across many sectors of the market, current valuations reflect unrealistic expectations for future growth. Furthermore, the capital required to develop newer, more advanced generative AI models has grown so large that one must question whether companies like Alphabet, Amazon, and Microsoft will ever recoup their investments.

This is not the only problem these companies face. According to The Wall Street Journal, OpenAI's highly anticipated GPT-5 project, codenamed "Orion," is running into significant data-availability problems. After more than 18 months of development and substantial financial investment, multiple large-scale training runs have produced disappointing results, with only limited performance gains relative to their immense computational cost. Each training run can take months and potentially cost hundreds of millions of dollars, so OpenAI must now prove that the mountain of capital deployed for GPT-5 does not ultimately become a mountain of malinvestment.

The primary challenge in GPT-5's development is the scarcity of high-quality training data. While larger models generally exhibit improved capabilities, acquiring enough diverse and reliable data to train a model at GPT-5's scale has proven exceptionally difficult. OpenAI is attempting to address this by creating new training data, such as human-written code and mathematical solutions accompanied by detailed explanations of the reasoning behind them, and by generating synthetic data. This approach aims to enrich the model's learning and improve its ability to solve complex problems. Even so, GPT-5 has frequently exhibited "hallucinations," in which it presents incorrect or misleading information as fact.

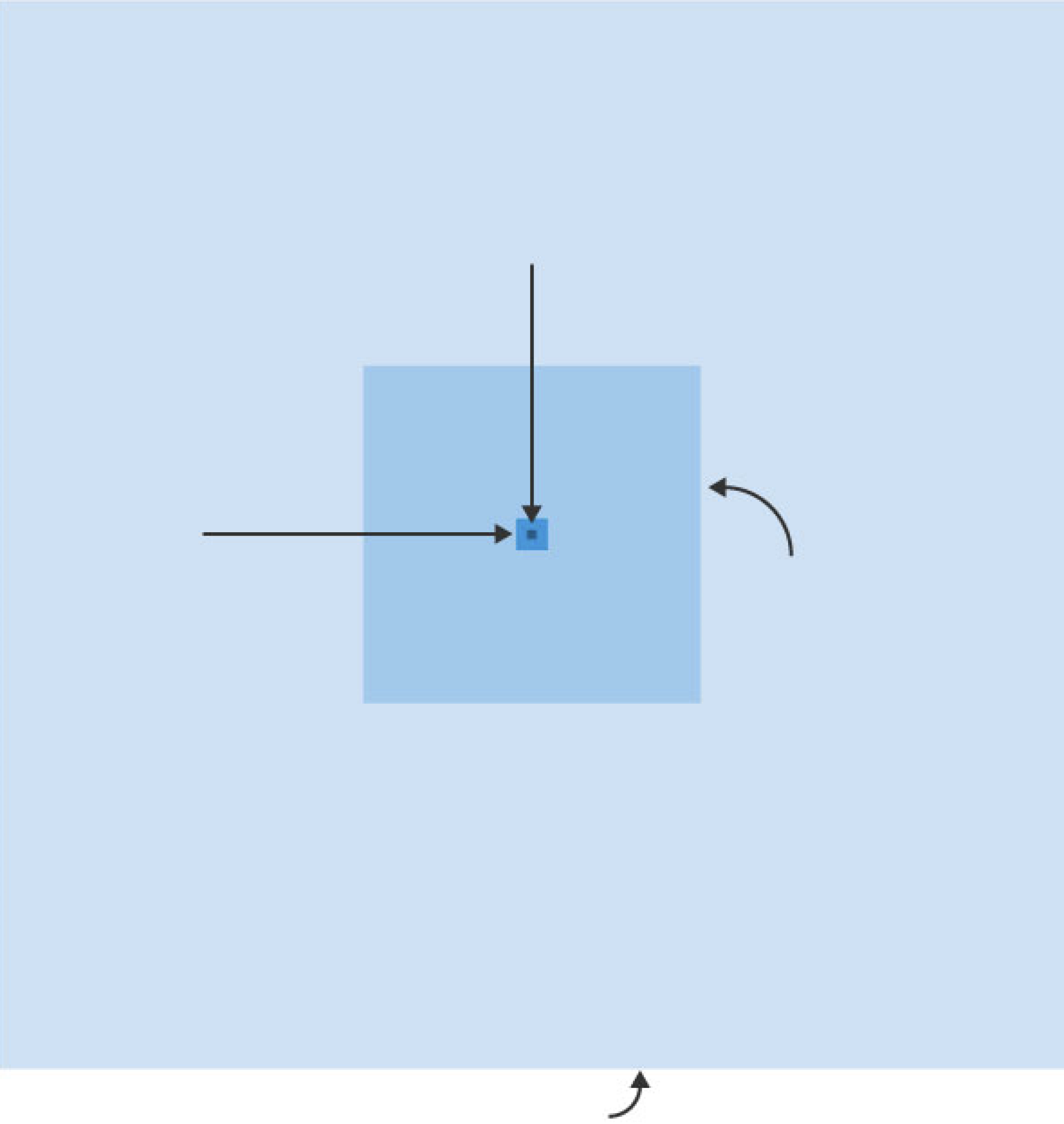

Number of parameters*, by GPT generation

| Model | Parameters |
|---|---|
| GPT-1 | 117 million |
| GPT-2 | 1.5 billion |
| GPT-3 | 175 billion |
| GPT-4 | 1.76 trillion† |

*Learned values (weights) that determine how a model processes information and generates its output.

†Estimate.

Source: OpenAI (GPT-1, -2, -3); SemiAnalysis (GPT-4)
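To put the scaling in the chart in perspective, the short sketch that follows computes each generation's parameter count as a multiple of the previous one. It uses only the figures shown above; note that the GPT-4 value is SemiAnalysis's estimate rather than an OpenAI disclosure, so any ratio involving GPT-4 inherits that uncertainty. The names `PARAMS` and `growth_factors` are purely illustrative.

```python
# Parameter counts taken from the chart above.
# Assumption: GPT-4's value is a third-party estimate (SemiAnalysis), not an official OpenAI figure.
PARAMS = {
    "GPT-1": 117e6,
    "GPT-2": 1.5e9,
    "GPT-3": 175e9,
    "GPT-4": 1.76e12,
}


def growth_factors(params: dict[str, float]) -> dict[str, float]:
    """Return each generation's parameter count as a multiple of the previous generation's."""
    names = list(params)  # dicts preserve insertion order, so this is GPT-1 through GPT-4
    return {
        f"{prev} -> {curr}": params[curr] / params[prev]
        for prev, curr in zip(names, names[1:])
    }


if __name__ == "__main__":
    for step, factor in growth_factors(PARAMS).items():
        print(f"{step}: {factor:,.1f}x")
    # Cumulative growth from the first generation to the latest (estimated) one.
    total = PARAMS["GPT-4"] / PARAMS["GPT-1"]
    print(f"GPT-1 -> GPT-4: {total:,.0f}x")
```

Run as written, it reports growth of roughly 12.8x, 116.7x and 10.1x per generation, or about 15,000x from GPT-1 to GPT-4. Parameter count is only one driver of training cost, but it illustrates how quickly the scale of each new model has compounded.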

With AI revenues still small relative to the capital being deployed, the stakes for OpenAI and other companies to deliver breakthroughs with next-generation models like GPT-5 could not be higher. Yet the project's current trajectory raises concerns, not only about GPT-5's feasibility and potential impact but also about the near-term viability of many next-generation generative AI models. Solutions to the data-scarcity problem may emerge in the near future, but these challenges are not easily overcome, and achieving performance that justifies the immense investment remains a critical hurdle. A failure could reverberate throughout the supply chain and would likely make future capital raising harder for companies like OpenAI.

These challenges are compounded by a macroeconomic environment of higher interest rates and tighter liquidity. With a large wave of corporate debt coming due for refinancing in 2026, OpenAI and similar companies may soon face a far less favorable fundraising environment, which could significantly alter the industry's dynamics. Architecture software, an area where Nvidia has demonstrated leadership, may benefit in such an environment as hyperscalers look to optimize hardware efficiency. However, this market remains highly fragmented, with hyperscalers such as Meta supporting alternatives like AMD, which has struggled to keep pace with Nvidia.

Having had little to no exposure to the primary beneficiaries of the AI trade in 2024, we have used the evolving dynamics in the AI space to reflect on our biases and consider where new trends and opportunities may emerge. While we remain open to the possibilities AI presents, we approach the space with caution, believing the real winners are likely to emerge in the application software layer, much as they did after the dot-com bubble burst in the early 2000s. We are cautiously optimistic about healthcare technology in particular, given promising early applications of AI, especially in imaging and automation.